Linear Regression is one of the easiest algorithms in machine learning. In this post we will explore this algorithm and we will implement it using Python from scratch.

As the name suggests this algorithm is applicable for Regression problems. Linear Regression is a Linear Model. Which means, we will establish a linear relationship between the input variables(X) and single output variable(Y). When the input(X) is a single variable this model is called Simple Linear Regression and when there are mutiple input variables(X), it is called Multiple Linear Regression.

Simple Linear Regression

We discussed that Linear Regression is a simple model. Simple Linear Regression is the simplest model in machine learning.

Model Representation

In this problem we have an input variable - X and one output variable - Y. And we want to build linear relationship between these variables. Here the input variable is called Independent Variable and the output variable is called Dependent Variable. We can define this linear relationship as follows:

The β1 is called a scale factor or coefficient and β0 is called bias coefficient. The bias coeffient gives an extra degree of freedom to this model. This equation is similar to the line equation y=mx+b with m=β1(Slope) and b=β0(Intercept). So in this Simple Linear Regression model we want to draw a line between X and Y which estimates the relationship between X and Y.

But how do we find these coefficients? That’s the learning procedure. We can find these using different approaches. One is called Ordinary Least Square Method and other one is called Gradient Descent Approach. We will use Ordinary Least Square Method in Simple Linear Regression and Gradient Descent Approach in Multiple Linear Regression in post.

Ordinary Least Square Method

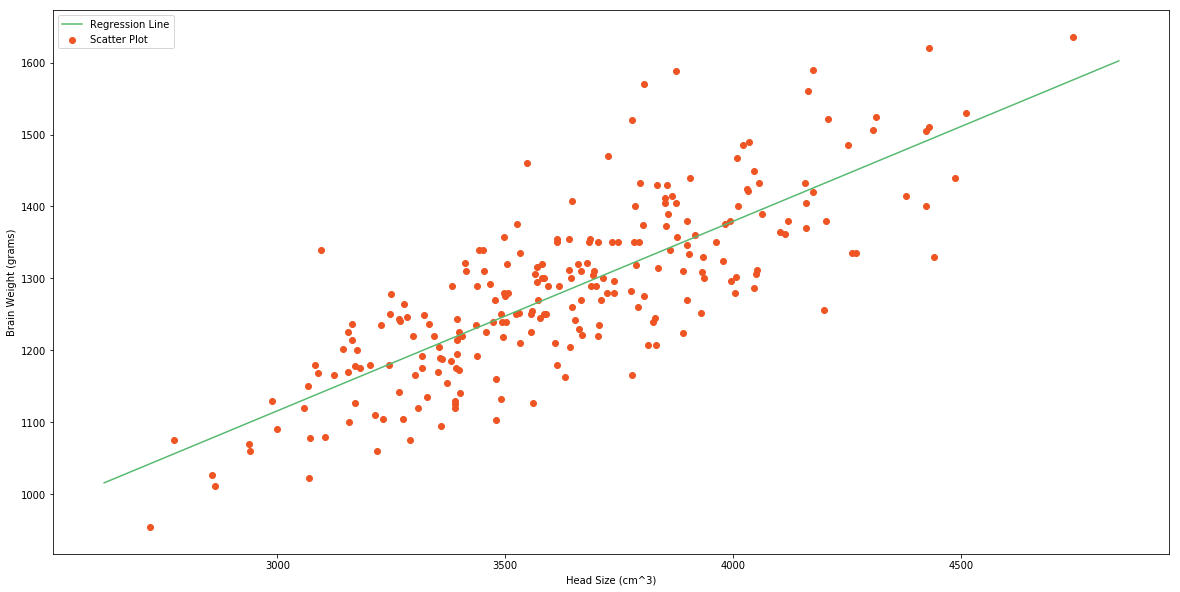

Earlier in this post we discussed that we are going to approximate the relationship between X and Y to a line. Let’s say we have few inputs and outputs. And we plot these scatter points in 2D space, we will get something like the following image.

And you can see a line in the image. That’s what we are going to accomplish. And we want to minimize the error of our model. A good model will always have least error. We can find this line by reducing the error. The error of each point is the distance between line and that point. This is illustrated as follows.

And total error of this model is the sum of all errors of each point. ie.

-Distance between line and ith point.

- Total number of points

You might have noticed that we are squaring each of the distances. This is because, some points will be above the line and some points will be below the line. We can minimize the error in the model by minimizing E. And after the mathematics of minimizing E, we will get:

In these equations is the mean value of input variable X and is the mean value of output variable Y.

Now we have the model. This method is called Ordinary Least Square Method. Now we will implement this model in Python.

Implementation

We are going to use a dataset containing head size and brain weight of different people. This data set has other features. But, we will not use them in this model.. This dataset is available here in Github repo in csv file. Let’s start off by importing the data.

#matplotlib inline for showing graphs in notebook as inline result

%matplotlib inline

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

#for setting size of figures

plt.rcParams['figure.figsize']=(20.0,10.0)

#Reading Data

data = pd.read_csv('headbrain.csv')

#print dimensions

print(data.shape)

#take a look of data frame

data.head()

(237, 4)

| Gender | Age Range | Head Size(cm^3) | Brain Weight(grams) | |

|---|---|---|---|---|

| 0 | 1 | 1 | 4512 | 1530 |

| 1 | 1 | 1 | 3738 | 1297 |

| 2 | 1 | 1 | 4261 | 1335 |

| 3 | 1 | 1 | 3777 | 1282 |

| 4 | 1 | 1 | 4177 | 1590 |

As you can see there are 237 values in the training set. We will find a linear relationship between Head Size and Brain Weights. So, now we will get these variables.

#intialization of independent and dependent variable vectors

X=data['Head Size(cm^3)'].values

Y=data['Brain Weight(grams)'].values

To find the values β1 and β0, we will need mean of X and Y. We will find these and the coeffients.

# Mean of X and Y

mean_X = np.mean(X)

mean_Y = np.mean(Y)

# Total number of values

m = len(X)

# Using the formula to calculate b0 and b1

numerator = 0

denominator = 0

for i in range(m):

numerator += (X[i] - mean_X) * (Y[i] - mean_Y)

denominator += (X[i] - mean_X) * (X[i] - mean_X)

b1 = numerator/denominator

b0 = mean_Y - (b1 * mean_X)

# Print coefficients (Also called weights)

print(b1,b0)

0.26342933948939945 325.57342104944223

There we have our coefficients.

That is our linear model.

Now we will see this graphically.

# Plotting X-Y graph and regression line

max_X=np.max(X)+100

min_X=np.min(X)-100

#Calculating line values x and y

#linspace() Return evenly spaced numbers over a specified interval

x = np.linspace(min_X,max_X,1000)

y = b0 + b1 * x

# Ploting Line

plt.plot(x, y, color='#58b970', label='Regression Line')

# Ploting Scatter Points

plt.scatter(X, Y, c='#ef5423', label='Scatter Plot')

plt.xlabel('Head Size (cm^3)')

plt.ylabel('Brain Weight (grams)')

plt.legend()

plt.show()

This model is not so bad. But we need to find how good is our model. There are many methods to evaluate models. We will use Root Mean Squared Error and Coefficient of Determination( Score).

Root Mean Squared Error is the square root of sum of all errors divided by number of values, or Mathematically,

Here is the ith predicted output values. Now we will find RMSE.

#Finding how is our model calculating root mean square error

# lower the rmse value to 1 better the regression model (infact rmse is minimum here)

rmse = 0

for i in range(m):

y_pred = (Y[i]-(b0 + b1*X[i]))

rmse += (y_pred * y_pred)

#Root mean square error

rmse=np.sqrt(rmse/m)

print(rmse)

72.1206213783709

Now we will find score. is defined as follows,

is the total sum of squares and is the total sum of squares of residuals.

Score usually range from 0 to 1. It will also become negative if the model is completely wrong. Now we will find Score.

# SS_t is the total sum of squares and SS_r is the total sum of squares of residuals.

# closer the r^2 value to 1 better the regression model

ss_t = 0

ss_r = 0

for i in range(m):

y_pred = b0 + b1 * X[i]

ss_t += (Y[i] - mean_Y) ** 2

ss_r += (Y[i] - y_pred) ** 2

r2 = 1 - (ss_r/ss_t)

print(r2)

0.6393117199570003

0.63 is not so bad. Now we have implemented Simple Linear Regression Model using Ordinary Least Square Method. Now we will see how to implement the same model using a Machine Learning Library called scikit-learn

The scikit-learn approach

scikit-learn is simple machine learning library in Python. Building Machine Learning models are very easy using scikit-learn. Let’s see how we can build this Simple Linear Regression Model using scikit-learn.

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

m=len(X)

# Cannot use Rank 1 matrix in scikit learn

X = X.reshape((m, 1))

# Creating Model

reg = LinearRegression()

# Fitting training data

reg = reg.fit(X, Y)

# Y Prediction

Y_pred = reg.predict(X)

# Calculating RMSE and R2 Score

mse = mean_squared_error(Y, Y_pred)

rmse = np.sqrt(mse)

r2_score = reg.score(X, Y)

# Compare these results with simpleL_R results(our linear regression model)

print(np.sqrt(mse))

print(r2_score)

72.1206213783709

0.639311719957

You can see that this exactly equal to model we built from scratch, but simpler and less code.

Let we move on to Multiple Linear Regression

Multiple Linear Regression

Multiple Linear Regression is a type of Linear Regression when the input has multiple features(variables).

Model Representation

Similar to Simple Linear Regression, we have input variable(X) and output variable(Y). But the input variable has n features. Therefore, we can represent this linear model as follows;

is the ith feature in input variable. By introducing =1, we can rewrite this equation.

Now we can convert this eqaution to matrix form.

Where,

and

We have to define the cost of the model. Cost bascially gives the error in our model. Y in above equation is the our hypothesis(approximation). We are going to define it as our hypothesis function.

And the cost is,

By minimizing this cost function, we can get find β. We use Gradient Descent for this.

Gradient Descent

Gradient Descent is an optimization algorithm. We will optimize our cost function using Gradient Descent Algorithm.

Step 1

Initialize values , ,…, with some value. In this case we will initialize with 0.

Step 2

Iteratively update,

until it converges.

This is the procedure. Here is the learning rate. This operation means we are finding partial derivate of cost with respect to each . This is called Gradient.

Read this if you are unfamiliar with partial derivatives.

In step 2 we are changing the values of in a direction in which it reduces our cost function. And Gradient gives the direction in which we want to move. Finally we will reach the minima of our cost function. But we don’t want to change values of drastically, because we might miss the minima. That’s why we need learning rate.

The above animation illustrates the Gradient Descent method.

But we still didn’t find the value of . After we applying the mathematics. The step 2 becomes.

We iteratively change values of according to above equation. This particular method is called Batch Gradient Descent.

Implementation

Let’s try to implement this in Python. This looks like a long procedure. But the implementation is comparitively easy since we will vectorize all the equations. If you are unfamiliar with vectorization, read this post